Singularity AW

Apart from the rain, we had a great after work with lots of interesting discussions. Luckily, we had decided to sit indoors.

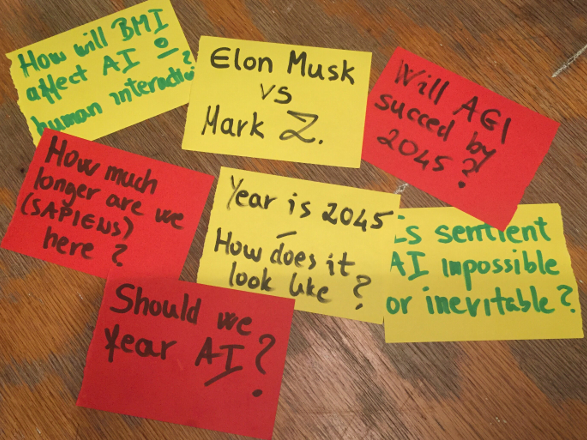

For the event Marcus and Melina had prepared a couple of questions, and though we do not claim that we have any answers, here are some of the thoughts from the evening.

Elon Musk vs Mark Z.

Shut up! Both are drawing too much attention to the arguments for and against the progression of AI. There are so many interesting things going on in between that deserve more focus.

Elon seems to scare people with the notion that a small group of people will rule the world, and that this is why it is important that the technology is open source. But to run the large models you need large amounts of processing power, and that is still only available to a few people. Mark wants us to share all our data so that he can tell us what we want to buy, while pretending to help us live better lives.

How will BMI affect AI and human interaction?

BMI (Brain Machine Interface) is probably the next evolution of our technology. Mainframe and punch cards, computer and keyboard, phone and touch screen, smart watch and voice commands, brain connected to the internet... If you do not know how to use a computer today you might have a hard time getting a job. In the future, you might not get a job if you do not have your brain connected to the hive. "Resistance is futile, you will be assimilated".

Is sentient AI impossible or inevitable?

The big question here is whether sentient AI is the goal of the universe. We continuously push the limit on what intelligence is. As soon as we develop a technology we say that it has intelligence, but soon after it is just another tool that we use to get things done, and we find flaws that we need to improve. And if this AI becomes as intelligent as humans, it will probably not have the same drives. Why should a robot care about its looks, other than to manipulate humans? It does not need to go to the gym, it does not care about the tastes of different foods, it does not need sleep. So, it will probably not compete against humans.

But technology keeps improving the algorithms' ability to recognize objects, understand text and let robots move around. It is not hard to imagine that the next step in the evolution of the machines is to connect these senses into some basic cognitive functions. As we add more layers of logic on top of that, they will get increasingly intelligent and have a better sense of the world they are operating in. The big question is whether they will become self-aware and start to create their own agenda, or whether they will stay obedient servants to the humans.

How much longer are we (SAPIENS) here?

Are we still here? Is AI our biggest existential threat? Some say it will take 1 million years before natural evolution has changed us into another species. Will we evolve into cyborg sapiens, or will the colonization of Mars lay the ground for the evolution of a new breed of sapiens more suited for Mars?

Year 2045 - what does it look like?

Will the machines do all the work necessary for human survival? Will we all live on a universal basic income? Freedom breeds creativity and creativity gives us purpose, but before we get there we will probably see many depressed people until they figure out what they want to do with their lives. Y Combinator is running an interesting experiment on this topic that is worth looking at.

Should we fear AI?

There are too many unknown variables. Fear is not a sustainable state to be in, but many things will change soon and the world will not be the same afterwards. We should try to proceed with caution. But the feeling is that even though some people want to resist, there is so much to gain from improvements in this kind of technology that we cannot really stop the evolution.

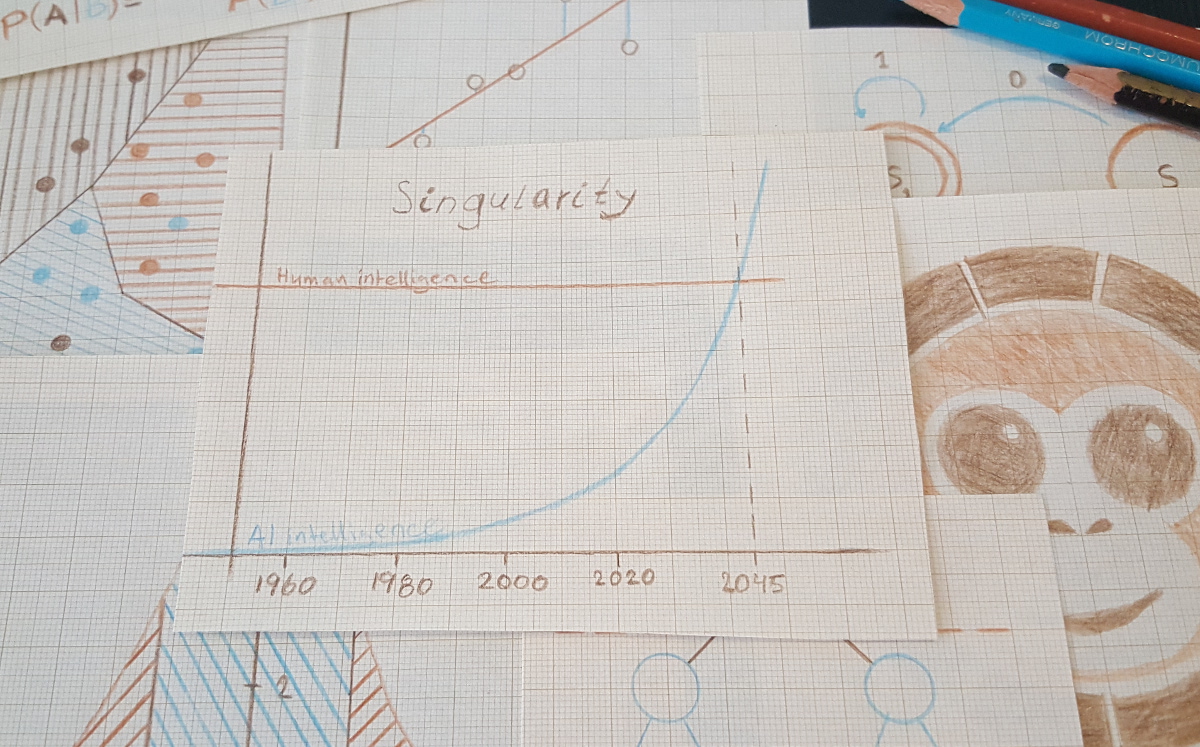

Will AGI succeed by 2045?

If we look at the recent progress in the field of ML and AI, from simple calculations to logic selection, learning systems, image recognition and speech, it is not hard to imagine the combination of all these solutions creating a more aware AI that can make predictions about what we humans are about to do in different situations. From those predictions it could give us relevant information before we know that we need it, and then evolve into smarter systems that can emulate our behavior. And that would not be the end. It is hard to imagine what an exponential future will look like, but an AGI (Artificial General Intelligence) could be here before we realize that we have one. And the next day we will be far behind.

If you do not want to fall behind, join us at our events, where we talk about these topics and share knowledge on ML and AI.

- Afterwork