Bayesian predictive inference machines

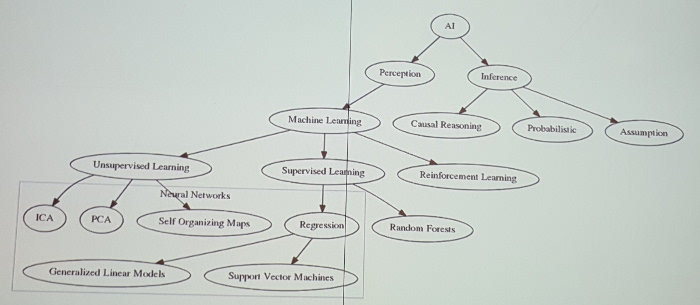

Perception vs. Inference

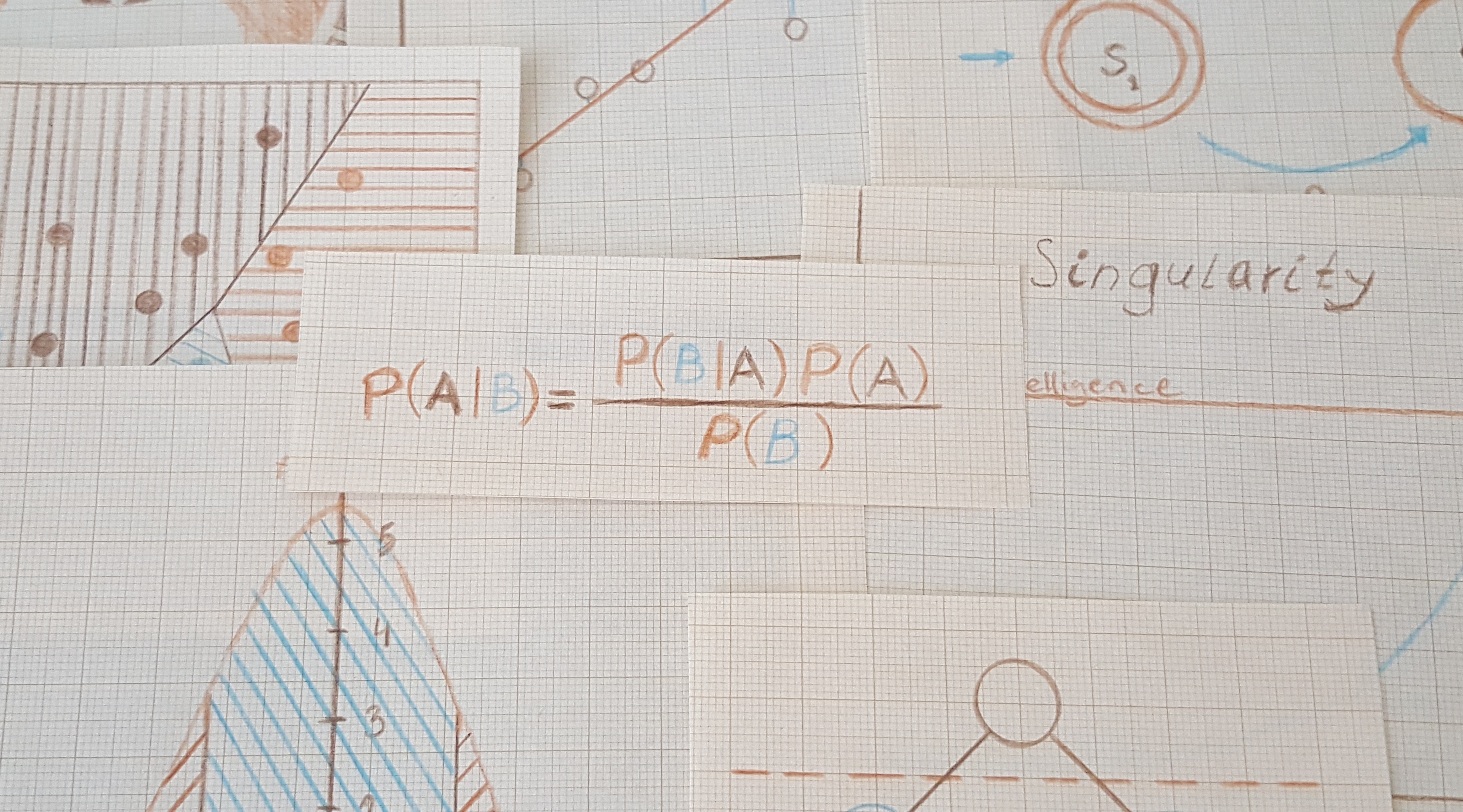

Would you rather base your next decision on the average opinion of everyone in the world, or on your own values and beliefs? Dr. Michael Green suggests that some of today's most popular machine learning models, the ones built on perception such as neural nets, are essentially just fitting a line to existing data. Regardless of how deep your network is, it does the exact same thing. You may configure it differently to get representations in different layers and make it more effective, but you are still only looking at what is part of your existing training sample. What Bayesian predictive inference machines built with probabilistic programming let you do is give the algorithm room for doubt and give the model the possibility to disprove your assumptions. A perception model, by contrast, only gives you one answer that fits your model, without questioning it.

This sounds a lot like the point made by Geoffrey Hinton in the article Artificial intelligence pioneer says we need to start over, where he also argues that backpropagation is probably the wrong approach. Nothing suggests that backpropagation will provide any kind of intelligence. It is only an advanced way of building statistical models. If the model does not fit the data well enough, you just throw some more neurons at it and you're done. But math and statistical models alone will not represent the context that is a crucial part of intelligence and understanding.
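The contrast between a single fitted line and a model with room for doubt can be sketched in a few lines. This is only an illustration and not code from the talk: the synthetic data, the known noise variance `noise_var`, and the broad Gaussian prior `prior_var` are all assumptions made for the example, and the Bayesian model used here is a plain conjugate linear regression rather than a full probabilistic program.

```python
import numpy as np

rng = np.random.default_rng(0)

# Synthetic training data (hypothetical): y = 2x + 1 plus noise, seen only on [0, 1].
x_train = rng.uniform(0.0, 1.0, size=20)
y_train = 2.0 * x_train + 1.0 + rng.normal(0.0, 0.3, size=20)

def design(x):
    """Design matrix with an intercept column and a slope column."""
    x = np.atleast_1d(x).astype(float)
    return np.column_stack([np.ones_like(x), x])

X = design(x_train)

# Point estimate: ordinary least squares returns one line and nothing else.
w_ols, *_ = np.linalg.lstsq(X, y_train, rcond=None)

# Bayesian alternative: Gaussian prior on the weights, Gaussian noise.
noise_var = 0.3 ** 2  # assumed known noise variance
prior_var = 10.0      # assumed broad prior variance on each weight
post_cov = np.linalg.inv(X.T @ X / noise_var + np.eye(2) / prior_var)
post_mean = post_cov @ X.T @ y_train / noise_var

def predict(x_new):
    """Posterior predictive mean and standard deviation at x_new."""
    phi = design(x_new)
    mean = phi @ post_mean
    # Noise variance plus the uncertainty that remains about the weights.
    var = noise_var + np.einsum("ij,jk,ik->i", phi, post_cov, phi)
    return mean, np.sqrt(var)

mean_in, std_in = predict(0.5)    # inside the training range
mean_out, std_out = predict(5.0)  # far outside the training range

print("least-squares line (intercept, slope):", w_ols)
print("prediction at x=0.5:", mean_in[0], "+/-", std_in[0])
print("prediction at x=5.0:", mean_out[0], "+/-", std_out[0])
```

Both approaches give nearly the same line, but only the Bayesian version reports that it is much less sure about the prediction far away from the training sample.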

General intelligence

We also touched on the topic of general artificial intelligence and how it might come to be. The question is what goal the AI will have. As a comparison, Dr. Green suggested that our human goal is, more or less, to optimize for future freedom. But freedom means different things to each person, and the question is whether the AI will also try to optimize for freedom. It will probably have a longer timeframe than humans and will most probably be able to think many more steps ahead. We can only hope that if the AI becomes sentient, it will be like in the movie Her rather than Terminator.

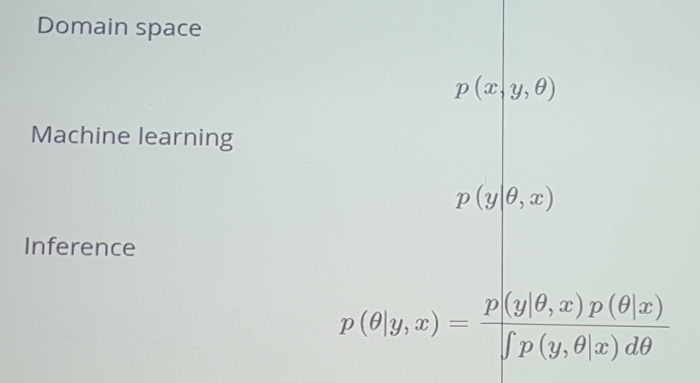

To become intelligent, you need to be able to do inference. When the field of AI was young, a scientist (whose name I do not recall) cheated and said that p(θ|x), the prior probability, will not change, so we can ignore it, and that ∫ p(y,θ|x) dθ, the evidence, is too much to take into account, and since θ will not change that much anyway, we can ignore this as well. And so all researchers pursued p(y|θ,x), the likelihood, and ignored the rest of the equation for calculating inference.
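Written out with the symbols used above, and assuming the standard form of Bayes' rule for parameters θ, inputs x and outputs y (the equation itself is not reproduced in this recap), the three pieces fit together as:

```latex
p(\theta \mid y, x)
  = \frac{p(y \mid \theta, x)\, p(\theta \mid x)}
         {\int p(y, \theta \mid x)\, d\theta}
```

The numerator is the likelihood times the prior, and the denominator is the evidence. Pursuing only the likelihood amounts to picking the single θ that makes p(y|θ,x) largest, instead of keeping the full distribution over θ on the left-hand side.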

Recommended books

Statistical Rethinking: A Bayesian Course with Examples in R and Stan - by Richard McElreath

Handbook of Markov Chain Monte Carlo - by Steve Brooks, Andrew Gelman, Galin L. Jones and Xiao-Li Meng

Make sure to get the 3rd edition of this one

Bayesian Networks for Probabilistic Inference and Decision Analysis in Forensic Science - by Franco Taroni, Alex Biedermann, Silvia Bozza, Paolo Garbolino, Colin Aitken

Bonus pdf while searching for the books... Probabilistic Networks — An Introduction to Bayesian Networks and Influence Diagrams

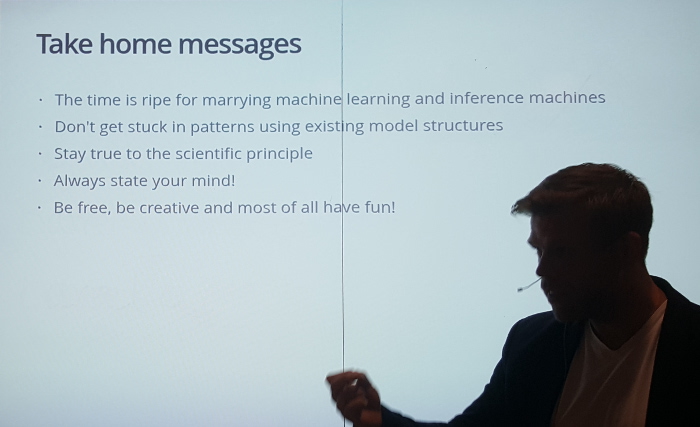

Final words from the presenter

We thank Dr. Green for an interesting presentation, and here is what he wants us to take home from his talk.

The presentation was recorded by FooCafe and we will provide a link to it as soon as it is released.

Here are the slides from the presentation.

- Bayesian, Machine Learning, AI